Databricks notebooks

Please be aware that the Databricks platform is regularly updated and may look different from the guidance included on this site. If you notice any discrepancies between the content on this site and the Databricks platform, please let us know by contacting statistics.development@education.gov.uk.

Notebooks

We recommend using the default .ipynb format for full retention of images and formatting included within the notebook. Notebooks can also be saved as raw source files, such as .R or .SQL, but markdown chunks are then saved as comments, which loses some of the formatting and any images you’ve included within the notebook for documentation.

Notebooks are a special kind of script that Databricks supports. They consist of code blocks and markdown blocks, which can contain formatted text, links and images. Because they combine markdown with code, they are very well suited to creating and documenting data pipelines, such as creating a core dataset that underpins your other products. They are particularly powerful when parameterised and used in conjunction with Workflows.

You can create a notebook in your workspace, either in a folder or a repository. To do this, locate the folder / repository you want to create the notebook in, then click the ‘Create’ button and select ‘Notebook’.

Any notebooks used for core business processes should be created in a repository linked to GitHub / DevOps where they can be version controlled.

Once you’ve created a notebook it will automatically be opened. Any changes you make are saved in real time, so the notebook always holds the latest version of its contents. To ‘save’ a snapshot of your work, we recommend using Git commits.

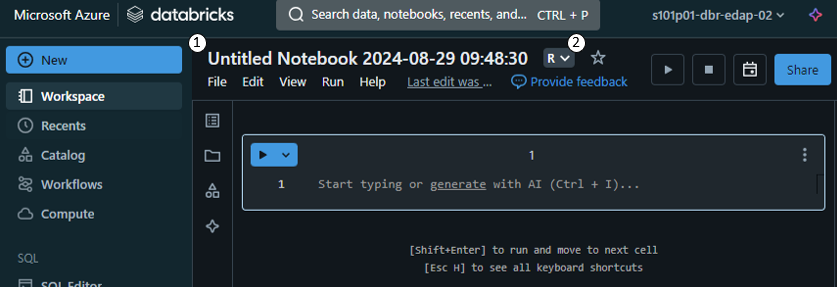

You can change the title from ‘Untitled Notebook <timestamp>’ (1), and set its default language in the drop down immediately to the right of the notebook title (2).

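The default language is the language the notebook will assume all code chunks are written in. In the screenshot above the default language is ‘R’, so all chunks will be assumed to be written in R unless otherwise specified. To write an individual chunk in a different language, start it with a language magic command such as %python or %sql.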

You can also add markdown cells to add text, links and graphics to your notebook in order to document the processing done within it.

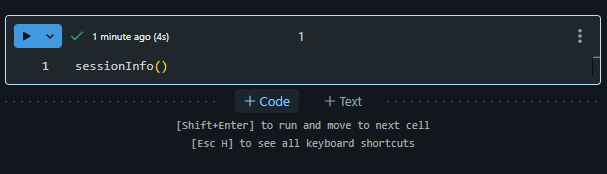

To add a new code or markdown chunk, move the mouse above or below another chunk and the buttons ‘+Code’ and ‘+Text’ will appear.

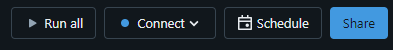

To run code chunks you’ll first need to attach a compute resource to the notebook by clicking the ‘Connect’ button in the top right-hand side of the page.

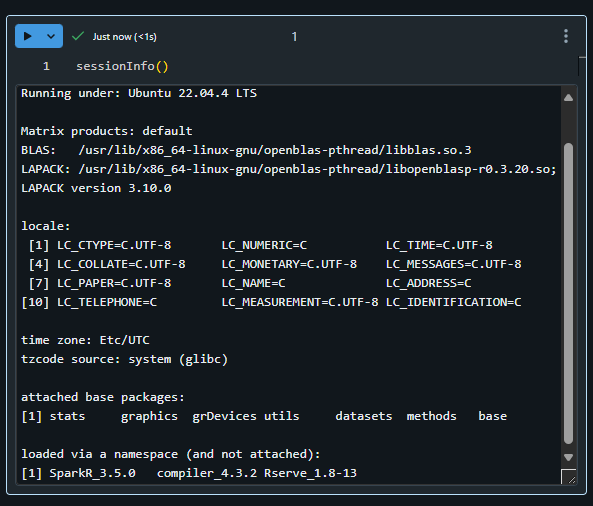

You can run a code chunk either by pressing the play button in the top left corner of the chunk, or by pressing Ctrl + Return/Enter on the keyboard. Any outputs that result from the code will be displayed underneath the chunk.

If you try to View() a data frame in R you’ll notice that the function doesn’t work within Databricks. Instead, Databricks provides the display() function for R users to view their data with.

Everything run in a notebook shares a single ‘session’, meaning that later chunks have access to variables, functions, etc. that were defined above. Chunks can be run manually, but this risks running code out of order, which may produce unexpected results. To avoid this, all chunks can be run in order from the beginning of the notebook using the ‘Run all’ button at the top of the page. Alternatively, you can ‘Run all above’ or ‘Run all below’ from any code chunk.

Notebooks cannot share a session with another notebook, so bear this in mind when constructing your workflows. If you need to pass data between notebooks, it can be written out to a table in the Unity Catalog using SQL / R / Python / Scala, as you would write to a SQL table in SQL Server. This table can then be accessed from later notebooks.
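
As an illustration of passing data between notebooks through a table, the following is a minimal Python sketch rather than a prescribed approach. It assumes a Python chunk, where Databricks provides the `spark` session for you. The helper names, and the catalog, schema and table names in the usage comments, are hypothetical placeholders.

```python
def table_name(catalog: str, schema: str, table: str) -> str:
    """Build a three-level Unity Catalog name: catalog.schema.table."""
    return f"{catalog}.{schema}.{table}"


def write_handoff(df, full_name: str) -> None:
    """Save a Spark DataFrame as a table, replacing any previous contents."""
    df.write.mode("overwrite").saveAsTable(full_name)


def read_handoff(spark, full_name: str):
    """Load the table into a Spark DataFrame, for example in a later notebook."""
    return spark.read.table(full_name)


# Usage inside Databricks (placeholder names, shown as comments because
# `spark` and `core_df` only exist within a notebook session):
#
#   First notebook:
#     name = table_name("my_catalog", "my_schema", "core_dataset")
#     write_handoff(core_df, name)
#
#   Later notebook:
#     core_df = read_handoff(spark, table_name("my_catalog", "my_schema", "core_dataset"))
```

Overwriting the table on each run keeps the hand-off repeatable, but whether that suits your pipeline depends on whether earlier versions of the data need to be retained.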

Notebooks can be parameterised using ‘widgets’, meaning a single notebook can be re-used with different inputs. This means they can be used in a similar way to a function in R/Python or a stored procedure in SQL.
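
To illustrate re-using one notebook with different inputs, the sketch below calls the same notebook once per value using `dbutils.notebook.run`, which Databricks makes available in Python chunks. This is one possible approach under stated assumptions, not the only way to do it: the helper name, the notebook path and the parameter name `academic_year` are hypothetical.

```python
def run_for_each(dbutils, notebook_path, parameter_name, values, timeout_seconds=600):
    """Run one parameterised notebook once per value and collect what each run returns.

    Each value is passed to the widget called `parameter_name` in the target
    notebook. Parameter values are passed as strings.
    """
    results = {}
    for value in values:
        # Returns whatever the target notebook passes to dbutils.notebook.exit()
        results[value] = dbutils.notebook.run(
            notebook_path, timeout_seconds, {parameter_name: value}
        )
    return results


# Usage inside Databricks (placeholder path and parameter name):
#   run_for_each(dbutils, "./build_core_dataset", "academic_year", ["2022", "2023"])
```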

A coding best-practice is to build components that can be re-used to perform many similar tasks rather than writing repetitive code.

This applies equally to notebooks or scripts you create within Databricks, which can be made re-usable through parameters. To reference a parameter within a notebook you can use the syntax :parameter_name from Databricks Runtime (DBR) 15+. In previous DBR versions the syntax was ${parameter_name}.
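
In a Python chunk, widget values are typically read with `dbutils.widgets` instead. The sketch below is a hedged illustration: `get_parameter` and `rows_for_year` are hypothetical helpers, and the table and column names are placeholders. It assumes the `dbutils` and `spark` objects that Databricks provides in Python chunks, and a Spark version whose `spark.sql` accepts the `args` argument for named parameter markers.

```python
def get_parameter(dbutils, name: str, default: str) -> str:
    """Declare a text widget with a default and return its current value.

    Widget values are strings, so convert them if you need a number or date.
    """
    dbutils.widgets.text(name, default)
    return dbutils.widgets.get(name)


def rows_for_year(spark, year: str):
    """Filter a (placeholder) table using the :parameter_name marker syntax."""
    query = (
        "SELECT * FROM my_catalog.my_schema.core_dataset "
        "WHERE academic_year = :academic_year"
    )
    # The value is bound to the marker by name rather than pasted into the SQL text
    return spark.sql(query, args={"academic_year": year})


# Usage inside Databricks (placeholder names):
#   year = get_parameter(dbutils, "academic_year", "2023")
#   display(rows_for_year(spark, year))
```

Binding the value through a parameter marker, rather than building the query with string formatting, avoids quoting mistakes when the input changes between runs.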