Personal cluster with RStudio and sparklyr

Please be aware that the Databricks platform is regularly updated and may look different from the guidance included on this site. If you notice any discrepancies between the content on this site and the Databricks platform, please let us know by contacting statistics.development@education.gov.uk.

The following instructions set up a sparklyr connection between your laptop and your Databricks cluster, which can then be used in RStudio to query data using tidyverse syntax.

To use sparklyr you will need a personal cluster set up on Databricks. They can be used within the Databricks environment, or through RStudio. You can set one up yourself if you don’t have access to one already.

Within the Databricks environment they are able to use SQL, R, Python and Scala, and are more flexible than SQL Warehouses. From RStudio you will be able to use this method to execute queries using R with the performance benefits provided by Databricks.

Please note: This guidance should be followed if you wish to run R scripts from RStudio against data held against tables in Databricks, or if you wish to work with a file held in a Databricks volume. You can read more about volumes on our Databricks fundamentals page.

You can use data from Databricks with R code in two different ways:

- In scripts or notebooks via the Databricks environment

- In RStudio via an ODBC/

sparklyrconnection

Pre-requisites

You must have:

- Access to Databricks and the data you’ll be working with

- Access to a personal cluster on Databricks

- R and RStudio downloaded and installed on your laptop

- RTools installed on your laptop (available from the Software Centre)

- Git installed on your laptop

- Membership of the active directory group ‘AZURE INTERNET EXCLUDE INSPECTION PA’

- Currently this involves logging an ‘Access to Restricted Group’ IT ticket through the ServiceNow portal

Compute resources

When your data is moved to Databricks, it will be stored in the Unity Catalog and you will need to use a compute resource to access it from other software such as RStudio.

A compute resource allows you to run your code using cloud computing power instead of using your laptop’s processing power. This means that using compute resources can allow your code to run faster than it would if you ran it locally, as it is like using the processing resources of multiple computers at once. On this page, we will be referring to the use of personal clusters as the compute resource to run your code.

Personal clusters

A personal cluster is a compute resource that supports the use of multiple code languages (R, SQL, Scala and Python) in the Databricks environment and can be set up to connect to RStudio as well. You can create your own personal cluster within the Databricks interface.

When you set up your personal cluster, you will be asked to select a runtime for that cluster. Different runtimes allow you to use different features and package versions. Certain packages are installed by default on a personal cluster and do not need to be installed manually. The specific packages installed are based on the Databricks Runtime (DBR) version your cluster is set up with. A comprehensive list of packages included in each DBR is available in the Databricks documentation. Generally speaking a higher DBR version number will provide more functionality.

Compute resources, including personal clusters, have no storage of their own. This means that if you install libraries or packages onto a cluster they will only remain installed until the cluster is stopped. Once re-started those libraries will need to be installed again.

An alternative to this is to specify packages / libraries to be installed on the cluster at start up. To do this click the name of your cluster from the ‘Compute’ page, then go to the ‘Libraries’ tab and click the ‘Install new’ button.

Use of compute resources are charged by the hour, and so personal clusters have been set to shut down after being unused for an hour in order to prevent unnecessary cost to the Department.

Process

There are four steps to complete before your connection can be established. These are:

- Creating a personal compute resource (if you do not already have one)

- Installing the

reticulate,pysparklyrandsparklyrpackages - Modifying your .Renviron file to establish a connection between RStudio and Databricks

- Adding connection code to your existing scripts in RStudio

Creating a personal compute resource

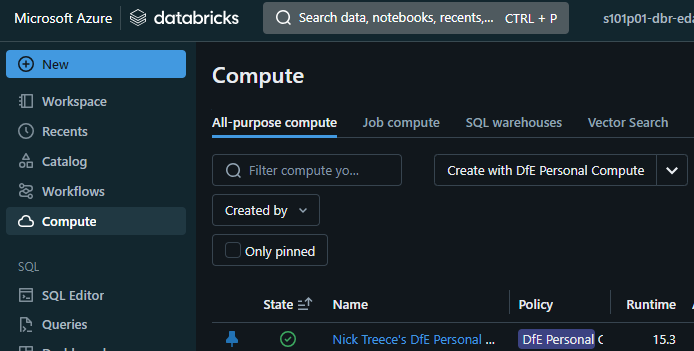

- To create your own personal compute resource click the ‘Create with DfE Personal Compute’ button on the compute page

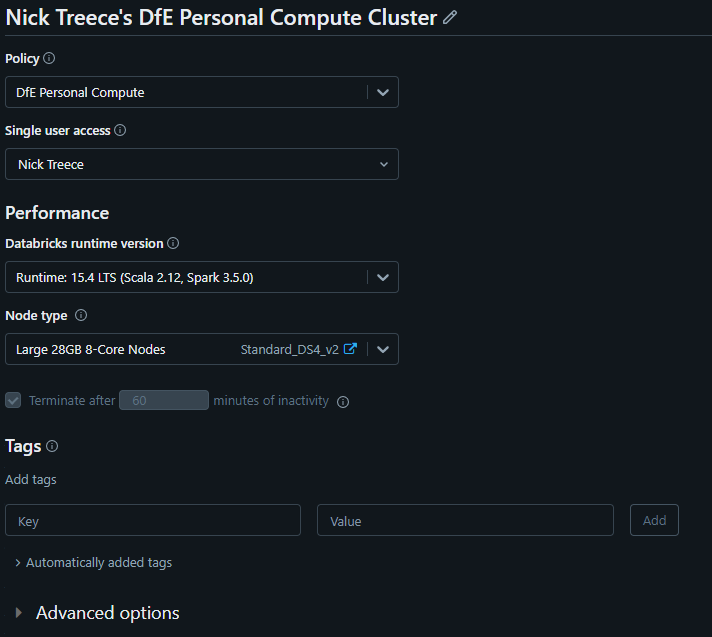

You’ll then be presented with a screen to configure the cluster. There are 2 options here under the performance section which you will want to pay attention to; Databricks runtime version, and Node type

Databricks runtime version - This is the version of the Databricks software that will be present on your compute resource. Generally it is recommended you go with the latest LTS (long term support) version. At the time of writing this is ‘15.4 LTS’

Node type - This option determines how powerful your cluster is and there are 2 options available by default:

- Standard 14GB 4-Core Nodes

- Large 28GB 8-Core Nodes

If you require a larger personal cluster this can be requested by the ADA team.

- Standard 14GB 4-Core Nodes

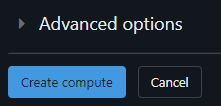

Click the ‘Create compute’ button at the bottom of the page. This will create your personal cluster and begin starting it up. This usually takes around 5 minutes

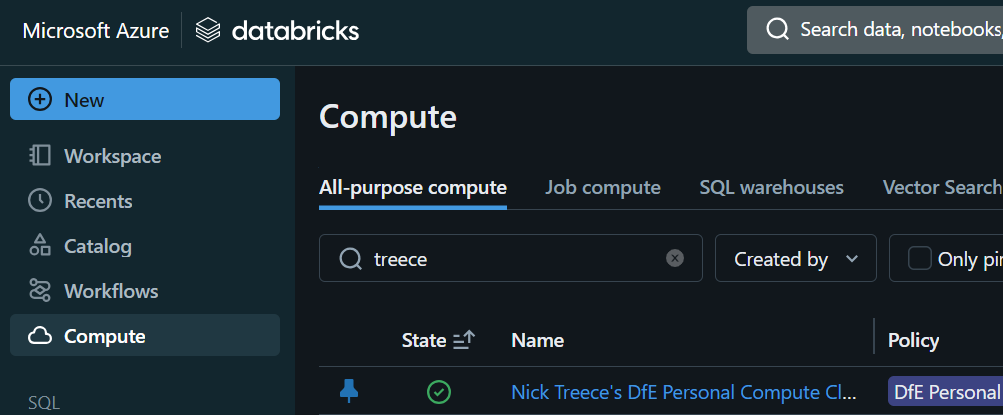

Once the cluster is up and running the icon under the ‘State’ header on the ‘Compute’ page will appear as a green tick

Setting up the ODBC driver

If you have previously set up an ODBC connection, or followed the set up Databricks SQL Warehouse with RStudio guidance, then you can skip this step.

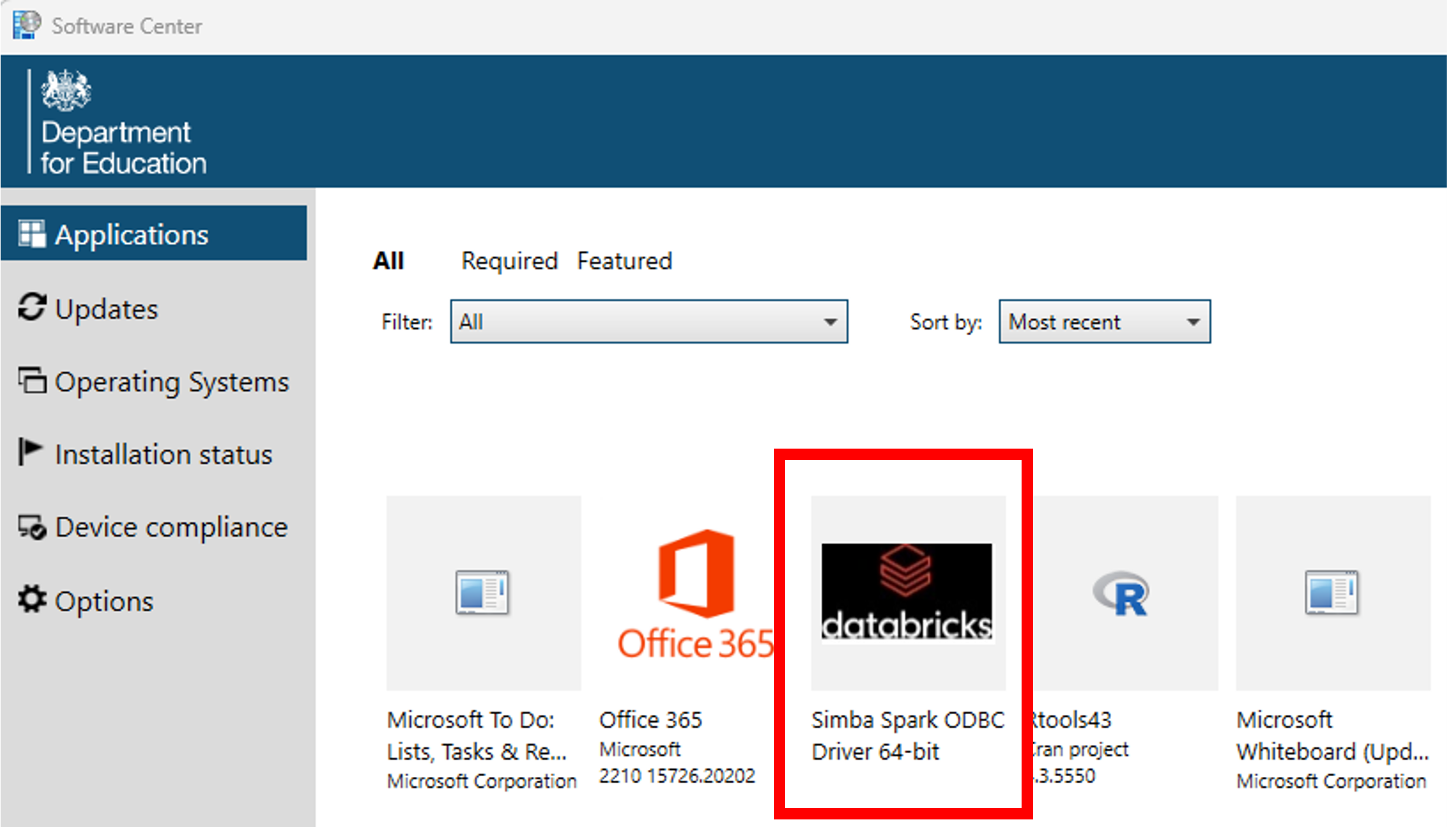

Open the Software Centre via the start menu

In the ‘Applications’ tab, click

Simba Spark ODBC Driver 64-bit

- Click install

Establishing an RStudio connection using environment variables

The sparklyr package in RStudio allows you to connect to Databricks by creating and modifying three environment variables in your .Renviron file.

If you have previously established a connection between a SQL Warehouse or personal cluster and RStudio, then some of these variables will already be in your .Renviron file.

To set the environment variables, call usethis::edit_r_environ(). You will then need to enter the following information:

DATABRICKS_HOST = "databricks-host"

DATABRICKS_CLUSTER_ID = "databricks-cluster-id"

DATABRICKS_TOKEN = "personal-access-token"Once you have entered the details, save and close your .Renviron file and restart R (Session > Restart R).

Everyone in your team that wishes to connect to the data in Databricks and run your code must set up their .Renviron file individually, otherwise their connection will fail.

The sections below describe where to find the information needed for each of the environment variables.

Databricks host

The Databricks host is the instance of Databricks that you want to connect to. It’s the URL that you see in your browser bar when you’re on the Databricks site and should end in “azuredatabricks.net” (ignore anything after this section of the URL).

Do not include the / at the end of the URL when you add it to the .Renviron file

Databricks cluster id

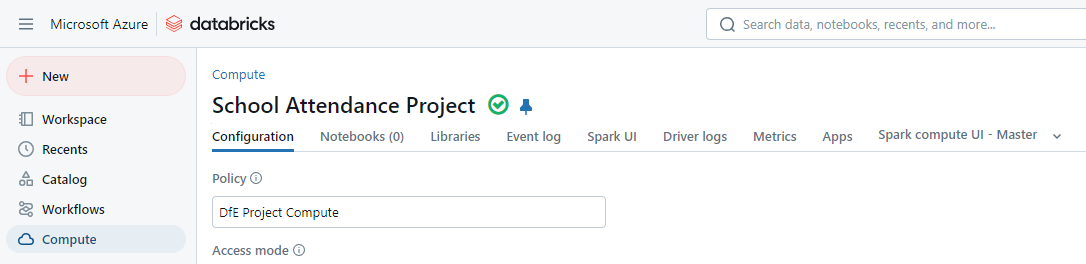

In Databricks, go to Compute in the left hand menu, and click on the name of your personal cluster:

On the Configuration tab, take the code between clusters/ and ? in the page URL. This is the ID for your personal cluster.

Databricks token

The Databricks token is a personal access token.

A personal access token is is a security measure that acts as an identifier to let Databricks know who is accessing information from the personal cluster. Access tokens are usually set for a limited amount of time, so they will need renewing periodically.

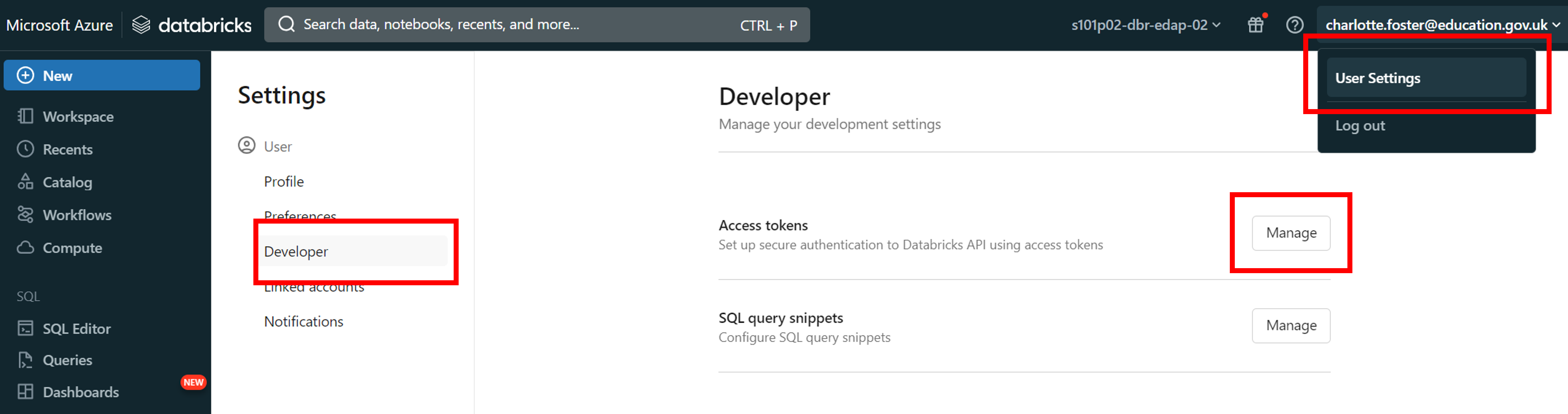

In Databricks, click on your email address in the top right corner, then click ‘User settings’

Go to the ‘Developer’ tab in the side bar. Next to ‘Access tokens’, click the ‘Manage’ button

Click the ‘Generate new token’ button

Name the token, then click ‘Generate’

Note that access tokens will only last as long as the value for the ‘Lifetime (days)’ field. After this period the token will expire, and you will need to create a new one to re-authenticate. Access tokens also expire if they are unused after 90 days. For this reason, we recommend setting the Lifetime value to be 90 days or less.

- Make a note of the ‘Databricks access token’ it has given you

It is very important that you immediately copy the access token that you are given, as you will not be able to see it through Databricks again. If you lose this access token before pasting it into RStudio then you must generate a new access token to replace it.

Setting up your sparklyr connection

To connect to Databricks through sparklyr you will first need to install other packages which it depends on. The following steps are only required once, and once you have successfully connected you will only need to use the code from the ‘Using sparklyr to access and navigate data’ section below.

Reticulate and python

Behind the scenes sparklyr converts your queries to python when interfacing with Databricks. The reticulate package is used for managing python environments through R. It can be installed using the following line of code.

install.packages("reticulate")You will also need to use reticulate to install a python environment in the background, the version of python you install should be higher than 3.10. The version is passed to the function as a string as below.

reticulate::install_python("3.10")This step can take quite a while to complete. If you get an error here please consult the ‘Troubleshooting’ section below.

Pysparklyr and sparklyr

The second dependency is pysparklyr. As part of the pysparklyr install it will also install sparklyr so there is no need to do this manually.

install.packages("pysparklyr")The installation may prompt you to update a number of packages. If this happens enter 1 (to update all packages) into the prompt.

In this step there is often an issue where the curl package will not update and it will restore the previous version. Unfortunately this will cause issues as pysparklyr depends on functions from a later version of curl.

If this occurs use the function remove_packages("curl") to manually uninstall it, then run install.packages("curl") to install the latest version.

Install databricks

The next step is to use the pysparklyr package to install_databricks(). This will install the necessary software to connect to the Databricks platform.

The installation is specific to the Databricks Runtime of your cluster, this means if you create a new cluster with a different DBR version you will need to run this function again to set up the new cluster.

pysparklyr::install_databricks(cluster_id = Sys.getenv("DATABRICKS_CLUSTER_ID"), as_job = F)This installation may take some time, but only needs to be done the first time you connect to a cluster.

Troubleshooting

Python won’t install

The most likely reason that python would fail to install is that you haven’t yet been granted access to the AD group ‘AZURE INTERNET EXCLUDE INSPECTION PA’ yet.

If this is the case you will likely see a long error message which will contain the text SSL: CERTIFICATE_VERIFY_FAILED (you may need to scroll across the error message to see this as it’s quite chunky).

To fix this issue submit an IT service desk ticket requesting access to the group above and retry once your access has been granted.

Using spark_connect() throws error: “‘curl_parse_url’ is not an exported object from namespace:curl”

This error appears when your version of the curl package is too old to have the functions that sparklyr depends on.

To uninstall the old version and update it use the following code and then re-run spark_connect().

remove.packages("curl")

install.packages("curl")Using spark_connect() throws error: “Databricks connect is not available in the current Python environment”

This error is usually caused by having another python environment already loaded in your R session.

To resolve this restart your R session (Session > Restart R) then re-run the spark_connect() function.

Queries hang when connecting to Databricks from RStudio on Azure Virtual Desktop

If your query runs for a long time and doesn’t return any results, check that you have a config.yml file.

The spark_connect() function requires this config file to run correctly. If you don’t have a config.yml file, create a blank one in your project folder, and your queries should then run correctly.

I’m having another problem with virtual environments

There are a number of issues that can arise when sparkylr creates virtual environments, and sometimes trying to work out what is wrong is quite difficult.

The easiest way to resolve the issue is usually to remove the virtual environments and let pysparklyr create them again with the install_databricks() function.

There are 2 methods of doing this, the first uses the reticulate package to view and remove them. First we can list what virtual environments exist using the code below.

reticulate::virtualenv_list()

#output: "r-sparklyr-databricks-16.1"We can then remove that environment

reticulate::virtualenv_remove("r-sparklyr-databricks-16.1")

#where "r-sparklyr-databricks-16.1" is what is returned from the code aboveOnce you’ve done this you can re-run pysparklyr::install_databricks() and it should recreate the environment.

If for some reason removing the environment through the command line doesn’t work we can delete them using file explorer. However, first we need to find where they are kept. We can do this with the following line of code which will print the full system path of any virtual environments that exist on your machine.

list.files(reticulate::virtualenv_root(), full.names = T)Once you have the path you can navigate to the .virtualenvs folder and manually delete the folder within it that corresponds to the name of the faulty environment. You can then re-run pysparklyr::install_databricks() and it will rebuild it from scratch.